The Systems Group at TU Darmstadt

Hello World! The Systems Group at TU Darmstadt is a collaboration of professors from the Computer Science department, pursuing research and teaching in systems topics together.

Core Topics in the Systems Group

News

-

Open PhD Position in the Systems Group!

April 28, 2025

Apply now; the deadline is May 13, 2025!

-

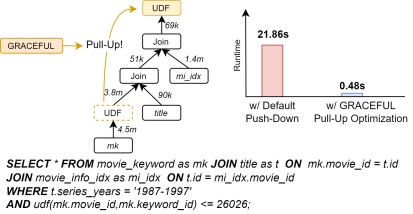

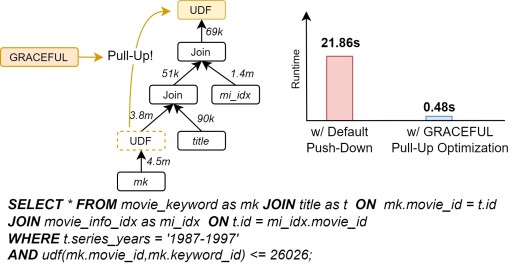

Towards Database Query Optimizers that understand UDFs

April 01, 2025

GRACEFUL: A Learned Cost Estimator For UDFs

Published in ICDE 2025

-

Lectures and Courses in the Summer Semester 2025

March 14, 2025

Lectures and Courses of the Systems Group

-

Towards High-performance and Trusted Cloud DBMSs – published at Datenbank Spektrum

March 10, 2025

Towards High-performance and Trusted Cloud DBMSs

-

“ELEET: Efficient Learned Query Execution over Text and Tables” Accepted to VLDB'25

February 06, 2025

ELEET: Efficient Learned Query Execution over Text and Tables

-

“How Good Are Learned Cost Models?” Accepted to SIGMOD'25

February 04, 2025

How Good Are Learned Cost Models, Really? Insights from Query Optimization Tasks

-

Systems Group on Bluesky & LinkedIn

January 23, 2025

-

CIDR 2025 Best Paper Award

January 20, 2025

“Towards Foundation Database Models” won the CIDR 2025 best paper award

-

SecureSphere Accepted to IEEE BigData 2024

November 20, 2024

SecureSphere: Advancing Security and Robustness in Query Processing over Outsourced Data

-

GOLAP Accepted to SIGMOD'25

October 24, 2024

GOLAP: A GPU-Accelerated system for high-speed analytics on SSD-resident data

Follow us on Bluesky & LinkedIn!

We are on Bluesky and LinkedIn.

Follow us for up-to-date information about the Systems-Group@TU-Darmstadt.