Multimodal AI Lab Wins Fact-Checking Challenge

2024/12/19

The lab's "InFact" method won a global claim verification competition. Today, the lab unveils the code and releases the stronger, multimodal successor, "DEFAME."

The Multimodal AI Lab at TU Darmstadt has won first place in a global challenge on automating text-only claim verification. Competing against 20 teams, the lab’s approach, “InFact,” emerged as the strongest in the challenge.

InFact, primarily developed by Mark Rothermel and Tobias Braun, breaks down the complex task of fact-checking into a multi-step pipeline. At each stage, the system leverages the capabilities of Large Language Models (LLMs) to interpret, decompose, and verify claims with precision. Most importantly, InFact does not primarily rely on the LLM's internal (often unreliable) knowledge but retrieves the necessary evidence from external sources to yield a more trustworthy prediction.
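To make the idea of a multi-step, evidence-grounded pipeline more concrete, here is a minimal Python sketch. It is not taken from the InFact code: the stage boundaries, the prompt prefixes, and all names (`verify_claim`, `Verdict`, `toy_llm`, `toy_search`) are assumptions made for illustration. The LLM and the search engine are replaced by toy stand-ins so the example runs on its own.

```python
from dataclasses import dataclass
from typing import Callable, List

# All names below are hypothetical; they are not taken from the InFact code.
LLM = Callable[[str], str]               # prompt -> model response
Retriever = Callable[[str], List[str]]   # query -> evidence snippets


@dataclass
class Verdict:
    claim: str
    questions: List[str]
    evidence: List[str]
    label: str


def verify_claim(claim: str, llm: LLM, search: Retriever) -> Verdict:
    """Run a toy interpret -> decompose -> retrieve -> judge pipeline."""
    # Stage 1: let the LLM restate the claim in unambiguous terms.
    interpretation = llm(f"INTERPRET: {claim}")

    # Stage 2: decompose the claim into questions, one per line.
    raw = llm(f"DECOMPOSE: {interpretation}")
    questions = [q.strip() for q in raw.splitlines() if q.strip()]

    # Stage 3: gather evidence from an external source, not from the LLM.
    evidence = [snippet for q in questions for snippet in search(q)]

    # Stage 4: judge only if evidence was found; otherwise abstain.
    if not evidence:
        return Verdict(claim, questions, evidence, "not enough evidence")
    joined = "\n".join(evidence)
    label = llm(f"JUDGE: {claim}\nEVIDENCE:\n{joined}").strip()
    return Verdict(claim, questions, evidence, label)


# Toy stand-ins so the sketch runs without a model or a search engine.
def toy_llm(prompt: str) -> str:
    if prompt.startswith("INTERPRET:"):
        return prompt[len("INTERPRET:"):].strip()
    if prompt.startswith("DECOMPOSE:"):
        return "Where was EMNLP 2024 held?"
    # Judge on the evidence part of the prompt only, never on the claim text.
    evidence_part = prompt.split("EVIDENCE:", 1)[-1]
    return "supported" if "Miami" in evidence_part else "refuted"


def toy_search(query: str) -> List[str]:
    corpus = {
        "Where was EMNLP 2024 held?": ["EMNLP 2024 took place in Miami, Florida."],
    }
    return corpus.get(query, [])


result = verify_claim("EMNLP 2024 was held in Miami.", toy_llm, toy_search)
print(result.label)
```

In this sketch the verdict depends on the retrieved snippets alone, and the pipeline abstains when retrieval returns nothing. A real system would swap the toy functions for an actual LLM client and a search backend.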

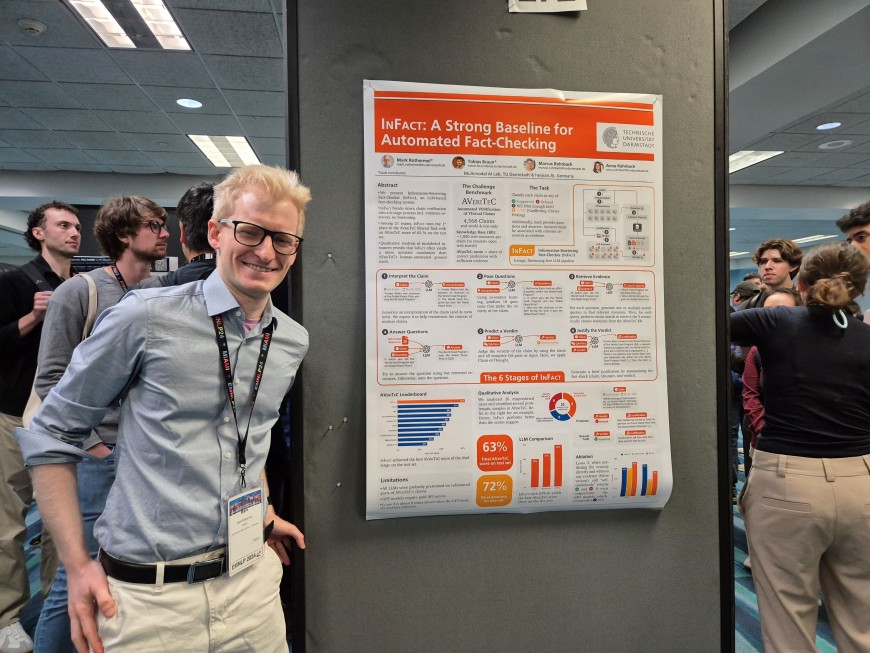

Mark Rothermel presented this work at the EMNLP conference in Miami, Florida, in November 2024 (watch his 7-minute talk in the live recording, starting at 3:09:30). The Multimodal AI Lab has also made the InFact code publicly available today on GitHub, encouraging the global research community to explore and build upon its work. For those interested in the technical details, the system description paper offers an in-depth look at InFact's architecture and methodology.

Successor Method “DEFAME” Just Released, Too

InFact’s success positions it as the de facto state-of-the-art method for automated textual fact-checking. However, the team emphasizes that this is just the beginning of their efforts to combat misinformation. Mark and Tobias have already developed a multimodal successor to InFact, designed to handle both textual and visual information. This next-generation method, which they call “DEFAME,” addresses the prevalence of visual misinformation such as cheap fakes: pristine photos used out of context to construct false narratives.

In their newly released preprint, which is currently under review, Tobias and Mark report that DEFAME outperforms all previous methods on three different fact-checking benchmarks. The team has released DEFAME's code on GitHub alongside InFact's.

The Urgency of Fact-Checking

The need for effective fact-checking methods has never been greater. Artificial Intelligence not only enables the mass creation of fake news and disinformation but also enhances its believability, increasing both the quantity and quality of misleading content. The World Economic Forum has identified disinformation as the largest mid-term risk facing society, ahead of even climate change and ongoing geopolitical conflicts. At the same time, human fact-checkers can review only a tiny fraction of the fake news in circulation. With the release of InFact and DEFAME, the Multimodal AI Lab joins the forefront of the urgently needed research on automating fact-checking.