Multimodal fact-checking is the task of extracting and verifying claims made in text and audiovisual content (e.g., social media posts). We develop state-of-the-art automated systems and build standardized evaluation benchmarks to help combat mis- and disinformation.

Highlights

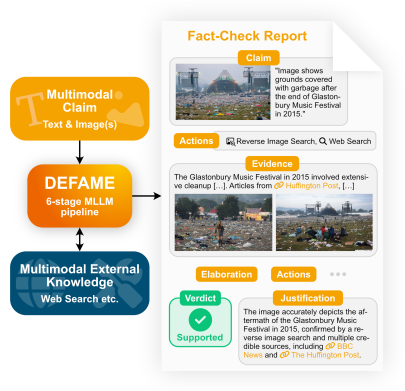

DEFAME, our most recent work, is the first multimodal fact-checking system that can handle both multimodal claims and multimodal evidence. It is the current state of the art (SOTA) on four different benchmarks. Read the paper or check out the GitHub repository.

InFact is the text-only fact-checking system with which we won the 2024 AVeriTeC challenge. We published the details in the system description paper. The code is publicly accessible on GitHub.

Research Lead

Mark Rothermel M.Sc.

Multimodal AI

Multimodal Grounded Learning

Contact

mark.rothermel@tu-...

Work

S4/23 207

Landwehrstr. 50A

64293 Darmstadt

The Problem of Fact-Checking

Multimodal Automated Fact-Checking (MAFC) is the task of verifying claims that incorporate text and (audio)visuals. It encompasses a wide range of subtasks that can be roughly grouped into two supertasks:

Claim Extraction

Given, for example, a social media post, the Claim Extraction supertask aims to distill the factually verifiable, check-worthy, and not yet checked claims from the raw content. It involves the following subtasks:

- Content Interpretation: Identify the author’s core statement(s) and intent. Ground the content in the available context (who posted it, when it was posted, etc.), resolve the role of contained media (images, video, audio), and handle ambiguities.

- Claim Decomposition: Sometimes referred to as “Claim Normalization”. Split the author’s statements into smaller/atomic facts that are easier to handle.

- Claim Filtering: Keep only the statements that are actual claims, i.e., that are factually verifiable. For example, remove all opinions.

- Checkworthiness Estimation: Determine how much each claim needs to be fact-checked. Claims vary in terms of impact, dissemination, harmfulness, etc. As we cannot fact-check everything on the web, we need to prioritize check-worthy claims.

- Claim Matching: Compare the claim to a database of already checked claims to avoid redundant fact-checking. This problem involves establishing a metric that efficiently measures the factual similarity of two syntactically different sentences.
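
To make the Claim Matching subtask more concrete, here is a minimal sketch that compares a new claim against a list of already checked claims. It uses token-level Jaccard similarity, which is only a lexical stand-in: it does not capture factual similarity between differently worded sentences, which is exactly the hard part described above. The function names (`tokens`, `jaccard`, `match_claim`) and the threshold of 0.5 are assumptions made for illustration and are not taken from DEFAME or InFact.

```python
import re
from typing import Optional


def tokens(text: str) -> set[str]:
    """Lowercase the text and return its set of alphanumeric word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def jaccard(a: str, b: str) -> float:
    """Token-level Jaccard similarity in [0, 1]; 0.0 if both texts are empty."""
    ta, tb = tokens(a), tokens(b)
    if not ta and not tb:
        return 0.0
    return len(ta & tb) / len(ta | tb)


def match_claim(
    claim: str,
    checked_claims: list[str],
    threshold: float = 0.5,  # hypothetical cut-off, would need tuning
) -> Optional[str]:
    """Return the most similar already checked claim, or None if no
    candidate reaches the similarity threshold."""
    best = max(checked_claims, key=lambda c: jaccard(claim, c), default=None)
    if best is not None and jaccard(claim, best) >= threshold:
        return best
    return None


# Hypothetical database of previously checked claims.
checked = [
    "The Eiffel Tower is located in Paris",
    "Vaccines cause autism",
]
print(match_claim("The Eiffel Tower is in Paris", checked))
print(match_claim("The moon is made of cheese", checked))
```

A realistic system would likely replace `jaccard` with a learned semantic similarity (for example, sentence embeddings), while the surrounding lookup logic could stay the same.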

Claim Verification

The Claim Verification supertask is the core of fact-checking. The goal is to ground the claim in retrieved evidence and predict its veracity. This involves the following subtasks:

- Evidence Retrieval: Integrate external tools (search engines, geolocation, chronolocation, person identification, etc.) to retrieve evidence. Retrieving web sources additionally requires credibility estimation, evidence filtering/ranking, and planning which tool to use when, so that retrieval is both effective and efficient.

- Reasoning: Apply inference methods such as Natural Language Inference (NLI) or Knowledge Graph (KG) reasoning to evaluate the claim in light of the retrieved evidence.

- Veracity Prediction: Given the retrieved evidence and reasoning, classify the claim’s veracity, typically as supported, refuted, or NEI (not enough information).

- Justification Generation: Generate a concise explanation for the result to increase interpretability of the fact-check.
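
The last two subtasks can be illustrated with a small sketch that turns already retrieved and already assessed evidence into one of the three verdicts and a short justification. It assumes that the reasoning step has assigned each piece of evidence a stance and that credibility estimation has produced a score in [0, 1]. All names (`Evidence`, `Stance`, `Verdict`, `predict_veracity`, `justify`) and the thresholds are hypothetical, and the credibility-weighted vote is a deliberately simple rule, not the method used in our systems.

```python
from dataclasses import dataclass
from enum import Enum


class Stance(Enum):
    SUPPORTS = "supports"
    REFUTES = "refutes"
    NEUTRAL = "neutral"


class Verdict(Enum):
    SUPPORTED = "supported"
    REFUTED = "refuted"
    NEI = "not enough information"


@dataclass
class Evidence:
    source: str         # where the evidence was retrieved from
    text: str           # the evidence snippet
    stance: Stance      # assumed output of the reasoning step
    credibility: float  # assumed score in [0, 1] from credibility estimation


def predict_veracity(
    evidence: list[Evidence],
    min_credibility: float = 0.5,  # hypothetical filter threshold
    margin: float = 0.5,           # hypothetical decision margin
) -> Verdict:
    """Credibility-weighted vote over supporting and refuting evidence."""
    credible = [e for e in evidence if e.credibility >= min_credibility]
    support = sum(e.credibility for e in credible if e.stance is Stance.SUPPORTS)
    refute = sum(e.credibility for e in credible if e.stance is Stance.REFUTES)
    if support - refute >= margin:
        return Verdict.SUPPORTED
    if refute - support >= margin:
        return Verdict.REFUTED
    # Too little or too conflicting evidence to decide.
    return Verdict.NEI


def justify(verdict: Verdict, evidence: list[Evidence]) -> str:
    """Build a one-sentence explanation naming the non-neutral sources."""
    decisive = [e.source for e in evidence if e.stance is not Stance.NEUTRAL]
    if not decisive:
        return f"Verdict: {verdict.value}. No decisive evidence was found."
    return f"Verdict: {verdict.value}. Decisive sources: {', '.join(decisive)}."


# Hypothetical evidence for a single claim.
found = [
    Evidence("news-site.example", "Officials confirmed the event.", Stance.SUPPORTS, 0.9),
    Evidence("blog.example", "Unrelated commentary.", Stance.NEUTRAL, 0.4),
]
verdict = predict_veracity(found)
print(justify(verdict, found))
```

Returning NEI when supporting and refuting evidence roughly cancel out keeps the sketch from forcing a verdict on contested claims; how such conflicts should be resolved in practice is a design decision of the concrete system.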

Interested in a Thesis?

Then please apply through our lab’s online application form. If capacity is available, we’ll explore topics for you and see if we can find one that matches your interests and skills.