Paper accepted at PETS 2022, a top conference on privacy

2022/01/13

RTG researchers Aidmar Wainakh, Ephraim Zimmer and Max Mühlhäuser, in collaboration with the AI&ML group, had their paper titled “User-Level Label Leakage from Gradients in Federated Learning” accepted at one of the top conferences on privacy, the annual Privacy Enhancing Technologies Symposium (PETS) 2022, taking place July 11–15, 2022, in Sydney, Australia.

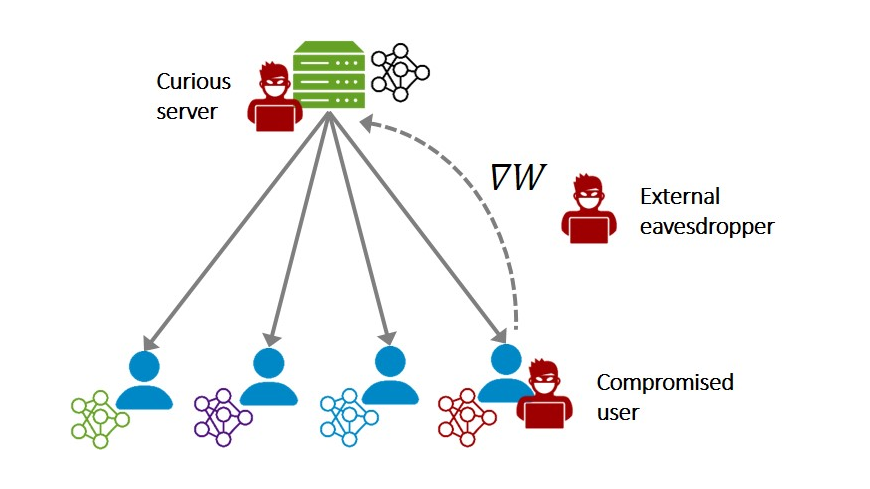

In this paper, RTG and AI&ML researchers propose Label Leakage from Gradients (LLG), a novel attack to extract the labels of the users’ training data from their shared gradients in federated learning. The attack exploits the direction and magnitude of gradients to determine the presence or absence of any label. They mathematically and empirically demonstrate the validity of the attack under different settings. Moreover, empirical results show that LLG successfully extracts labels with high accuracy at the early stages of model training. They also discuss different defense mechanisms against such leakage.
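To give a rough intuition for why gradient direction can reveal labels, the sketch below is a simplified illustration and not the LLG algorithm from the paper. It assumes a classifier trained with softmax cross-entropy and an attacker who sees the batch-averaged gradient of the output-layer bias. Under those assumptions, the gradient for each class is the mean of (predicted probability minus one-hot label), so it can only be negative for a class that actually occurs in the batch. All function names and the toy data are hypothetical.

```python
import math
import random


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def output_bias_gradient(batch_logits, batch_labels):
    """Batch-averaged gradient of softmax cross-entropy w.r.t. the output bias.

    For one sample this gradient is (probs - one_hot(label)).
    """
    num_classes = len(batch_logits[0])
    grad = [0.0] * num_classes
    for logits, label in zip(batch_logits, batch_labels):
        probs = softmax(logits)
        for c in range(num_classes):
            grad[c] += probs[c] - (1.0 if c == label else 0.0)
    return [g / len(batch_labels) for g in grad]


def labels_with_negative_gradient(grad):
    """Classes whose gradient entry is negative must be present in the batch."""
    return {c for c, g in enumerate(grad) if g < 0}


# Toy setup (assumption): an untrained model whose logits are close to zero.
random.seed(0)
num_classes = 10
true_labels = [3, 3, 7, 1]
batch_logits = [
    [random.gauss(0.0, 0.1) for _ in range(num_classes)] for _ in true_labels
]

grad = output_bias_gradient(batch_logits, true_labels)
inferred = labels_with_negative_gradient(grad)
print(sorted(inferred))
```

In this toy setting the sign check alone recovers which labels are present. It does not recover how often each label occurs, and a negative sign is a sufficient but not a necessary indicator: a class that the model already predicts confidently for other samples in the batch can have a positive averaged gradient even though it is present.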

Citation info:

Aidmar Wainakh, Fabrizio Ventola, Till Müßig, Jens Keim, Carlos Garcia Cordero, Ephraim Zimmer, Tim Grube, Kristian Kersting, and Max Mühlhäuser. User-Level Label Leakage from Gradients in Federated Learning. In the annual Privacy Enhancing Technologies Symposium (PETS) 2022 [to appear].