With the increasing ubiquity of sensors and advances in AI, technology has made learning and knowledge sharing easier. This project explores users' perspectives on trust and privacy in Internet of Things (IoT) learning settings, weighing the potential benefits against the ethical concerns raised by collecting and analyzing personal data. It emphasizes the need to understand how various factors in IoT environments affect users' perceptions of trust and privacy, and how these factors may vary across applications and contexts.

In educational research, AI has been used for more than 25 years to adapt learning content and tasks in e-learning contexts, and recent advances in AI methods and big data open up even greater potential. However, research on IoT sensors in learning settings is still in its early stages, and comprehensive studies of users' perspectives on trust and privacy in IoT learning settings are lacking.

The main objectives of subarea C.3 are to examine users' perspectives on trust and privacy in IoT learning settings and to identify trustworthy IoT learning agents. The first part focuses on trust: factors influencing subjective and objective trust are derived theoretically and then examined with empirical data collected from users in various learning settings.

Current PhD project of subarea C.3:

Trust in Digital Learning Agents

-Fransisca Hapsari-

This project investigates the factors that influence learners' trust in pedagogical agents equipped with sensors. Maintaining users' trust is crucial for adoption and engagement, especially when mistakes occur or privacy rights are violated. Understanding how trust is calibrated and how expectations are established is essential for aligning both with the agents' actual reliability.

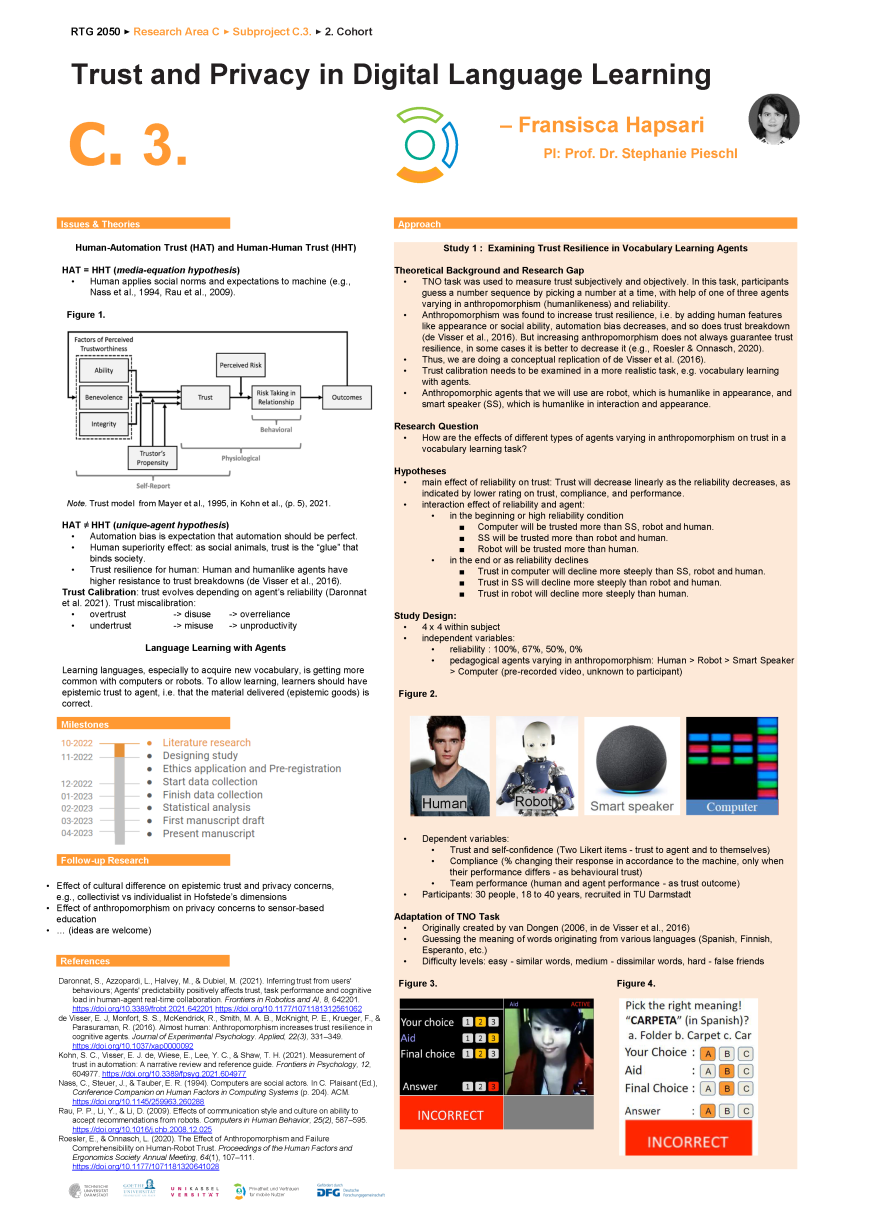

The use of anthropomorphism in pedagogical agents raises questions about its implications for trust. While human-like design offers advantages such as convenience and enjoyment, it may also introduce the unreliability or imperfections associated with human-like characteristics. In the first study of this project, we explore the impact of anthropomorphism on learners' trust in digital learning agents.

In general, we use behavioral experiments to explore the relationships between system reliability, degree of human-likeness, users' trust, and additional factors. Examining how these factors interact and influence trust provides insight into users' trust dynamics and into the factors that contribute to trust breakdowns and repairs. These findings may also have implications for learners' privacy judgments or privacy regulation.

In conclusion, this project investigates the factors relevant to calibrating user trust appropriately in digital learning agents. Understanding these factors will inform the design of trustworthy learning automation systems. By examining how anthropomorphism, system reliability, and other factors affect users' trust, we aim to enhance the effectiveness of digital learning agents and to promote user engagement and adoption.

| Name | Working area(s) | Contact |
|---|---|---|
| Prof. Dr. Stephanie Pieschl | C.3 (Phase II) | pieschl@psychologie.tu-... , +49 6151 16-23991, S1\|15 122 |
| Fransisca Hapsari | C.3 | fransisca.hapsari@tu-... , +49 6151 16-23995, S1\|15 126 |