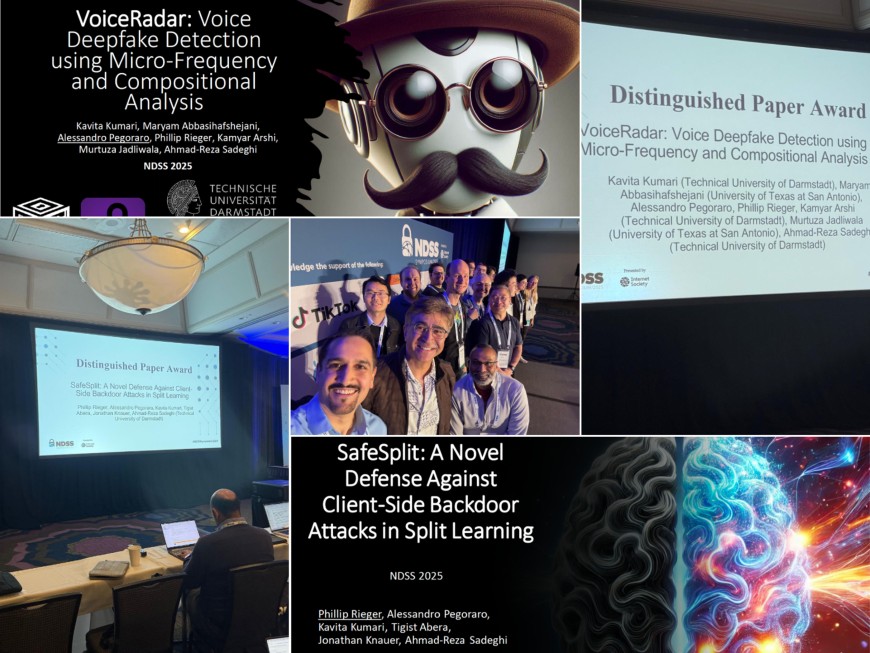

Our Team Wins Two Distinguished Paper Awards at NDSS Symposium 2025 – One of the Top Four Security Conferences in the World!

2025/02/27

Both of our papers, SafeSplit: A Novel Defense Against Client-Side Backdoor Attacks in Split Learning and VoiceRadar: Voice Deepfake Detection Using Micro-Frequency and Compositional Analysis, have received the Distinguished Paper Award at NDSS Symposium 2025!

SafeSplit, presented by Phillip Rieger on the second day, tackles security in Split Learning, where partial model access and sequential training create unique challenges for backdoor mitigation. We introduce a dual-layer defense mechanism that combines static frequency-domain analysis with dynamic rotational distance metrics to detect and mitigate client-side backdoor attacks.

VoiceRadar, presented by Alessandro Pegoraro on the first day, introduces a novel approach to detecting deepfake audio, leveraging micro-frequency oscillations and compositional analysis inspired by physical models. By integrating these characteristics into machine learning loss functions, VoiceRadar significantly improves the detection of AI-generated speech, ensuring more robust audio authentication.

Presenting these works at NDSS 2025 was a great experience, and we are grateful for the insightful discussions with fellow researchers. A huge thank you also goes to all co-authors, Phillip Rieger, Kavita Kumari, Alessandro Pegoraro, Maryam Abbasihafshejani, Jonathan Knauer, Kamyar Arshi, Tigist Abera, Murtuza Jadliwala, and Ahmad-Reza Sadeghi, as well as to the NDSS community for their support and engagement!

We look forward to investigating these topics further and exploring new directions in AI security and machine learning.