PhD project of subarea A.3:

The Duality of Trust in Human-Artificial Intelligence Learning Partnerships

-Patrick Hendriks-

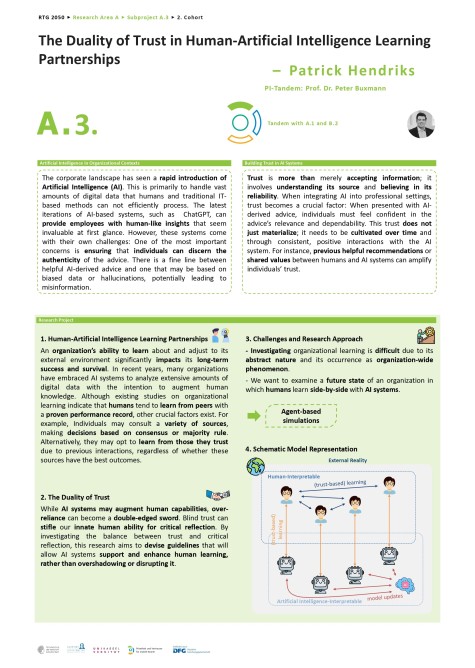

In today's rapidly evolving world, artificial intelligence (AI) plays a critical role, especially in managing vast amounts of digital data that are beyond the capabilities of traditional methods. Advanced AI systems, such as ChatGPT, promise to provide employees with human-like insights, streamline decision-making processes, and offer tailored advice. However, integrating AI into professional environments is not without its challenges. A primary concern is the authenticity of the information provided by AI; distinguishing valuable insights from potentially biased or harmful advice becomes paramount. In this context, trust is not just about accepting AI-generated advice, but understanding and believing in its source and reliability. Ensuring that professionals can fully trust AI-derived advice requires careful cultivation over time, supported by positive, consistent interactions with the system.

The research project addresses two core issues. First, it explores “human-artificial intelligence learning partnerships,” highlighting the role of trust and learning in organizational contexts. As organizations increasingly use AI to augment human knowledge, understanding the dynamics of learning becomes essential. Do people learn more from trusted sources than from highly performant ones? Second, the research addresses the “duality of trust,” highlighting the potential pitfalls of over-reliance on AI. What is needed is a balanced approach in which trust in AI complements human critical reflection. Through a comprehensive study of these issues, the project aims to chart a future where humans and AI coexist and learn together in organizations, leveraging each other's strengths while mitigating potential disruptions.

| Name | Working area(s) | Contact |
|---|---|---|
| Prof. Dr. Peter Buxmann | A.3, B.2 | buxmann@is.tu-... +49 6151 16-24333 S1\|02 242 |
| Patrick Hendriks | A.3 | |

Subarea A.3, entitled “Building Trustworthy AI Systems in an Era of Data Privacy”, aims to develop privacy-centric solutions that balance personalised experiences against privacy, with the goal of increasing trust in Artificial Intelligence (AI). In today's increasingly digital world, where personal data and information are constantly exchanged and scrutinised, the concepts of privacy and trust have become paramount.

AI holds immense potential, with the ability to augment human capabilities, automate mundane tasks, and gain invaluable insights from vast amounts of data. This ability translates into greater efficiency, innovation and more informed decision-making across a range of industries, including healthcare and finance. AI's data-driven algorithms are poised to revolutionise these sectors by providing content, recommendations and services that are tailored to individual preferences, thereby enriching the consumer experience in software and digital services. Nonetheless, AI can also engender considerable uncertainty and apprehension among end users, potentially heightening privacy concerns and eroding trust in these technologies.

PhD project of subarea A.3:

Building Trustworthy AI Systems in an Era of Data Privacy

-Anne Zöll-

The central objective of this project within subarea A.3 is to embed privacy-centred principles in AI-driven technologies. This includes the development and implementation of robust privacy policies that ensure the transparent handling of personal data. As a result, the protection of personal data will be strengthened and end users will have more control over their information.

In addition, the project seeks to increase end user confidence in the technology. This will be achieved through improved communication about the inner workings of AI systems and how they manage data, and through a commitment to consistent, error-free performance. Transparency will be a guiding principle, with clear explanations of how algorithms work and how data is used.

More broadly, the project focuses on cultivating a positive experience with technology. This includes optimising user interfaces, minimising errors and providing efficient solutions tailored to end user needs. The user is at the forefront of both design and delivery, with the ultimate aim of creating intuitive, user-friendly interfaces and experiences.

By addressing emerging privacy concerns and increasing trust in the technology, subarea A.3 aims to bring about a significant upsurge in the adoption of AI-driven products and services. This in turn will usher in a new era of technology use, where individuals can confidently harness the power of AI, knowing that their privacy is protected and that the technology they use is aligned with their needs and expectations.

| Name | Working area(s) | Contact |
|---|---|---|
| Prof. Dr. Peter Buxmann | A.3, B.2 | buxmann@is.tu-... +49 6151 16-24333 S1\|02 242 |
| Anne Zöll (parental leave) | A.3 | anne.zoell@tu-... |

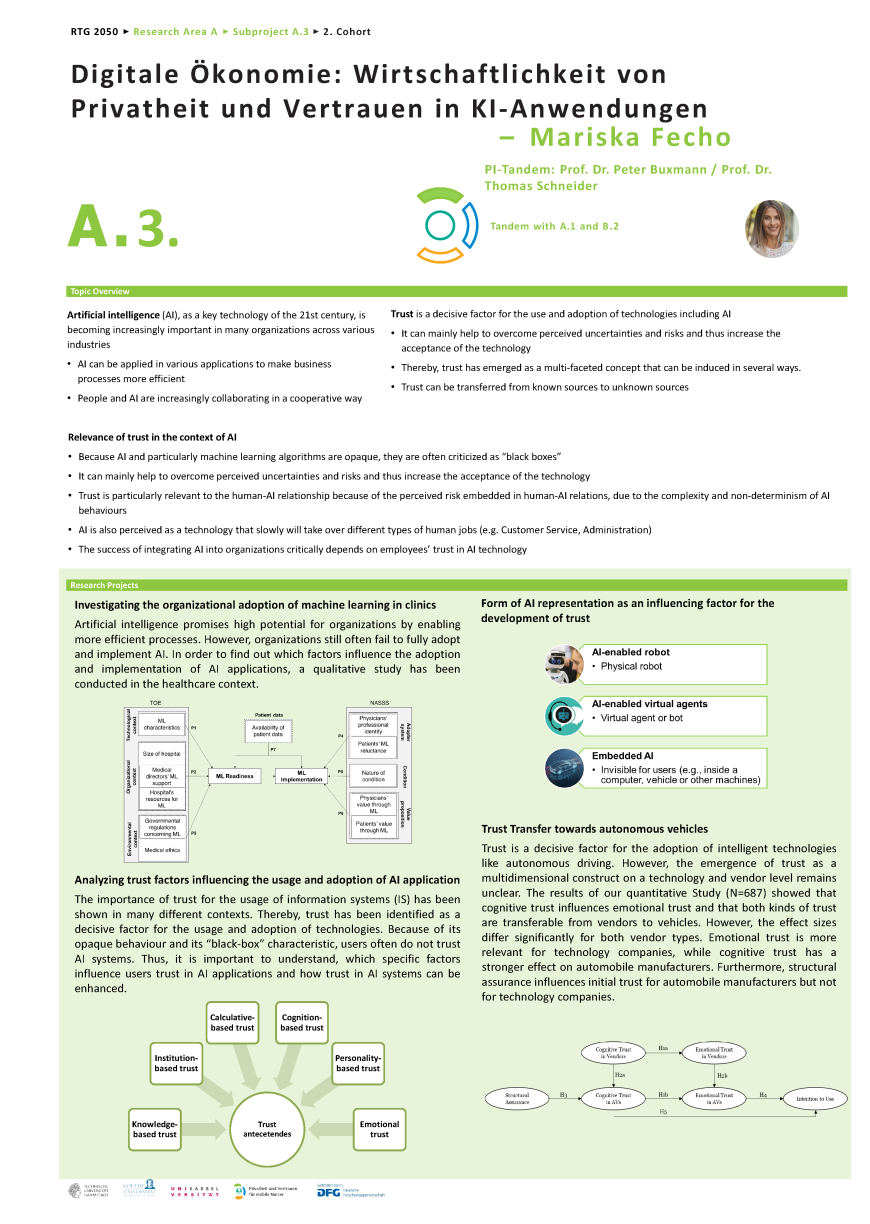

Subarea A.3, “Economics of privacy and trust in artificial intelligence applications”, aims to investigate the influence of users’ trust in artificial intelligence (AI) applications. AI as a general-purpose technology has recently found its way into businesses and organisations. Being capable of understanding and learning from external data, AI and particularly machine learning (ML) promise enormous advantages for users and organisations. Thus, ML methods are becoming increasingly important in the digital economy. However, many ML algorithms behave like black boxes whose results cannot be fully traced or explained. Consequently, users have concerns about ML-based applications and distrust them. Sub-project A.3 investigates the application of AI and ML-based systems in the economy to understand users' attitudes and behaviour towards AI (e.g. by analysing factors for the implementation and usage of AI applications). In addition, trust in AI systems will be investigated from a multi-layer perspective of trust. The aim is to identify factors that influence users' trust in AI systems and to develop measures for increasing this trust.

Current PhD project of subarea A.3:

Potentials and Challenges for the Adoption of Artificial Intelligence – An Economic Investigation of Trust in Artificial Intelligence

-Mariska Fecho-

Artificial intelligence (AI), as a key technology of the 21st century, is becoming increasingly important in many organizations across various industries. AI can be applied to different fields of activity to make business processes more efficient. With the availability of large amounts of data and increased computing capacity, machine learning (ML), a sub-field of AI, has gained more attention. ML enables computers to learn specific tasks from data and make predictions based on that data. However, AI and machine learning algorithms are often criticized for their black box behavior, as they do not provide information about how they arrived at a particular result.

Trust is a decisive factor for the use and adoption of technologies. Above all, it can help to overcome perceived uncertainties and risks and thus increase the acceptance of the technology. Trust has emerged as a multi-faceted concept that can be created or induced in several ways. Therefore, the research of area A.3 aims to investigate relevant trust factors for the adoption and usage of AI-based applications, in particular the concepts and dimensions of trust involved. Moreover, it has been shown that transparency can increase trust in technologies. Thus, the specific factor of transparency will be further investigated in the context of AI.

| Name | Working area(s) | Contact |
|---|---|---|
| Prof. Dr. Peter Buxmann | A.3, B.2 | buxmann@is.tu-... +49 6151 16-24333 S1\|02 242 |
| Mariska Fecho (parental leave) | A.3, Tandem: A.1, B.2 | mariska.fecho@tu-... +49 6151 16-24321 S1\|02 237a |