Research subarea B.2 focuses on privacy and empowerment of users through added value in collectives.

The first goal here is to use collective machine learning (ML) for generating added value within digital collectives.

To assess the influence of users' data on ML models, the second goal is to introduce key privacy figures for ML models that are assessable within digital collectives.

Third, hybrid apps for digital collectives provide the basis for collective ML.

The fourth goal aims at establishing trust in apps and services by transparency in order to empower users.

Current PhD project of subarea B.2:

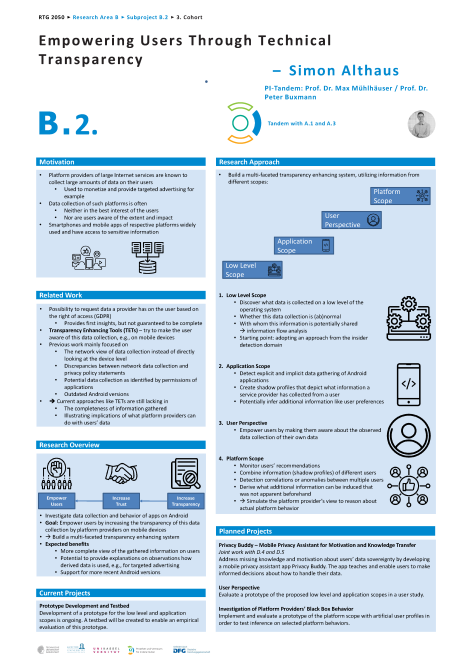

User Empowerment Through Technical Transparency

-Simon Althaus-

Platform providers of large Internet services such as online social networks are known to collect large amounts of data on their users that is monetized and used to provide targeted advertising for example. The data collection of such platforms is often neither in the best interest of the users nor are users aware of the extent and impact of this data collection.

A user could exercise his right of access according to the GDPR in order to request the data that a provider has on the user. This would yield a first insight into the user data stored at providers, however it is not guaranteed to be complete or even applicable outside the EU. Related work proposed transparency enhancing tools (TETs) that try to make the user aware of this data collection on mobile devices. On the one hand, this previous work mainly focused on the network view of data collection instead of directly looking at what’s happening on the device level. Some work also looked at discrepancies between this network data collection and privacy policy statements. On the other hand, previous work considered the potential data collection as identified by the granted and accessed permission of applications. However, this only

reveals what data apps could have accessed in the worst case, not what was actually collected and is subsequently used by platform providers.

As such, current approaches like TETs are still lacking in the completeness of information gathered and in illustrating implications of what platform providers can do with such information. Thus, this subproject B.2 of the Research Training Group (RTG) 2050 focuses on empowering users by increasing the transparency of this data collection by platform providers on mobile devices. Among the expected benefits of this approach in comparison to previous work are a more complete view of the gathered information on users and the potential to provide explanations on observations how derived data is used, e.g., for targeted advertising.

For this, a TET is proposed that utilizes information from different levels: First, by adopting an approach from the insider detection domain, we discover what data is collected on a low-level of the operating system, whether this data collection is (ab)normal and with whom this information is potentially shared. Second, on the application level, shadow profiles are created that depict what information a service provider has collected from a user. Third, data from the network level may be used to complement information. Finally, the information of different users is combined in order to simulate the platform provider’s view and derive what additional information can be induced that was not apparent beforehand. For that purpose, machine learning techniques such as federated learning will be investigated to facilitate the approach in a privacy-preserving manner and potentially improve it.

| Name | Working area(s) | Contact | |

|---|---|---|---|

| Prof. Dr. Max Mühlhäuser | B2, D4 | max@tk.tu-... +49 6151 16-23200 S2|02 A114 |

| Simon Althaus | B.2, Tandem: A.1, A.3 | althaus@tk.tu-... +49 6151 16-20108 S2|02 A121 |

Previous PhD project of subarea B.2 (Phase II)

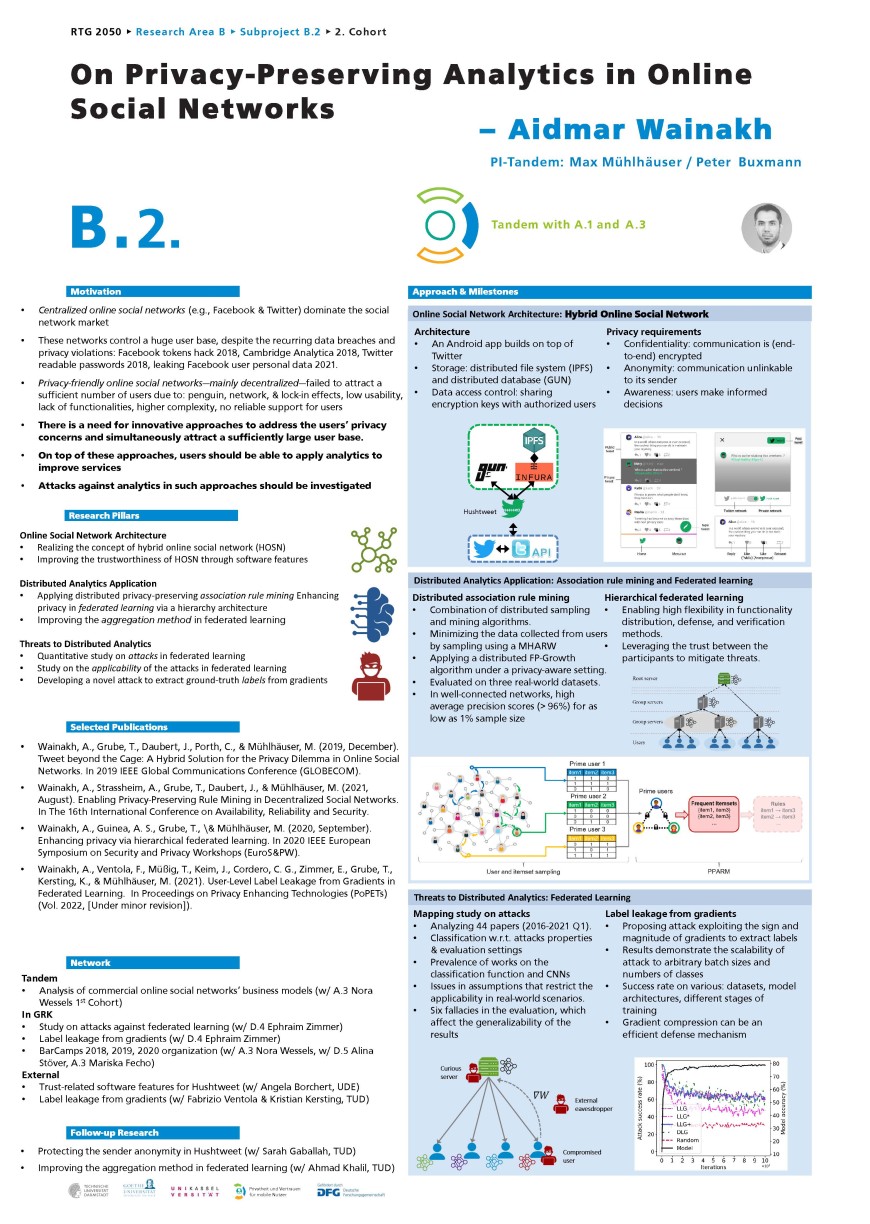

On Privacy-Enhanced Distributed Analytics in Online Social Networks

-Aidmar Wainakh-

The dominant OSNs (e.g., Facebook and Twitter) are commercial; the service providers of OSNs use the data of the users to generate revenue, mainly by realizing targeted advertisements. As of today, the mainstream of OSNs is realized in a centralized manner. That is, all kinds of data shared by users or generated by users’ interaction are controlled by a central provider and stored in their infrastructures. Unfortunately, the providers show consistently insufficient commitment to user data and privacy protection. Oftentimes, user data is used without informed consent or misused in various ways. The providers frequently disclose various forms of user data to third parties, e.g., data brokers. Furthermore, user data was prone to unauthorized access on many occasions, e.g., Twitter readable passwords 2018, and leaking Facebook user personal data 2021. Some parties violated the terms of use of the OSNs and harvested user data for suspicious purposes, such as Cambridge Analytica. The privacy of users in centralized online social networks (COSNs) is constantly and seriously threatened or even violated considering the aforementioned issues.

Within subproject B.2 of RTG 2050, we focus on enhancing the privacy aspect in OSNs through three research pillars:

Online Social Network Architecture

• We propose the concept of hybrid online social networks (HOSNs), which combines the usage of COSNs and decentralized OSNs, by that users benefit from both the market penetration of COSNs and the privacy advantages of decentralized OSNs. Users can post their public content to the COSN, while sharing their private content only with their friends through the decentralized OSN beyond the knowledge of the service providers.

• Understanding the user perception of HOSN is crucial to calibrate the development of the concept. That is, we study the relationships between four aspects that influence users’ perception and behavior: privacy concerns, trust beliefs, risk beliefs, and the willingness to use. In the light of the relationships between these aspects, we develop software features to address users’ privacy concerns, increase trustworthiness, and increase willingness to use.

Distributed Analytics Application

• Recommender systems are essential to improve services in OSNs. Several analytic techniques can be used to generate recommendations. We work on the Association Rule Mining (ARM) technique. We enable efficient privacy-preserving ARM on distributed data. To achieve that, we combine graph sampling and distributed ARM algorithms.

• We look into enhancing the privacy of the emerging distributed machine learning technique, Federated Learning (FL). In particular, we explore the privacy benefits of applying FL in a hierarchy architecture, where the aggregation of the updates happens in multiple layers through the hierarchy.

Threats to Distributed Analytics

• We study the attacks against FL. For that, we identify the foci and gaps in the research literature. We point out issues in the assumptions and evaluation setups commonly used by researchers, and their implications on (1) the applicability of the proposed attacks and (2) the generalizability of the conclusions.

• The labels of user data can be of high sensitivity, e.g., in medical applications. We highlight the information leakage risk of sharing gradients in FL by investigating novel attacks that extract ground-truth labels from gradients.

| Name | Working area(s) | Contact | |

|---|---|---|---|

| Prof. Dr. Max Mühlhäuser | B2, D4 | max@tk.tu-... +49 6151 16-23200 S2|02 A114 |

| Dr. Aidmar Wainakh | B.2 | wainakh@tk.tu-... +49 6151 16-20108 S2|02 A 313 |